LU-SGS versus DPLUR

Comparison of Implicit Methods Available In Aither

Aither v0.3.0 contains four implicit methods for solving the system of equations selected. Lower-Upper Symmetric Gauss Seidel (LU-SGS) [1], Block Lower-Upper Symmetric Gauss Seidel (BLU-SGS) [2], Data Parallel Lower Upper Relaxation (DPLUR) [3], and Block Data Parallel Lower Upper Relaxation (BDPLUR) [4]. These four methods are similar in nature but have different performance characteristics depending on the problem at hand. At their core they all involve solving a system of equations. We start with the implicit discretization cast in delta form as shown below where .

In the above equation is a x block matrix where each block is x . is the number of cells in the domain and is the number of equations being solved in each cell. and are x 1 block vectors where each block is of size x 1. As you can see solving the Navier-Stokes equations implicitly boils down to solving the cannonical linear algebra problem . All four of the implicit methods within Aither start from this discretization. They differ only in the approximations made in constructing the matrix , and the solution method. All four methods approximately solve , where LU-SGS and BLU-SGS use the Gauss Seidel method and DPLUR and BDPLUR use the Jacobi method. Since is being solved approximately, there is no reason to waste computational expense to accurately calculate . Since is being approximately calculated it is factored into lower trianguler, diagonal, and upper trianguler matrices.

All of the methods make varying approximations in constructing the implicit matrix. The LU-SGS and DPLUR methods approximate the , , and matricies with their spectral radii, while the BLU-SGS and BDPLUR methods use the full x matrix on the diagonal. The approximations made by LU-SGS and DPLUR allow them to use less memory as they do not need to store a full matrix for , but only a scalar value. They are also more computationally efficient as the inversion of the matrix is trivial. The BLU-SGS and DPLUR methods make less approximations and therefore should converge in fewer iterations. However, they are more computationally expensive and require more memory. Using the full matrix on the diagonal hurts the diagonal dominance of the linear system making these methods less stable. They may require a lower CFL number than their scalar diagonal counterparts.

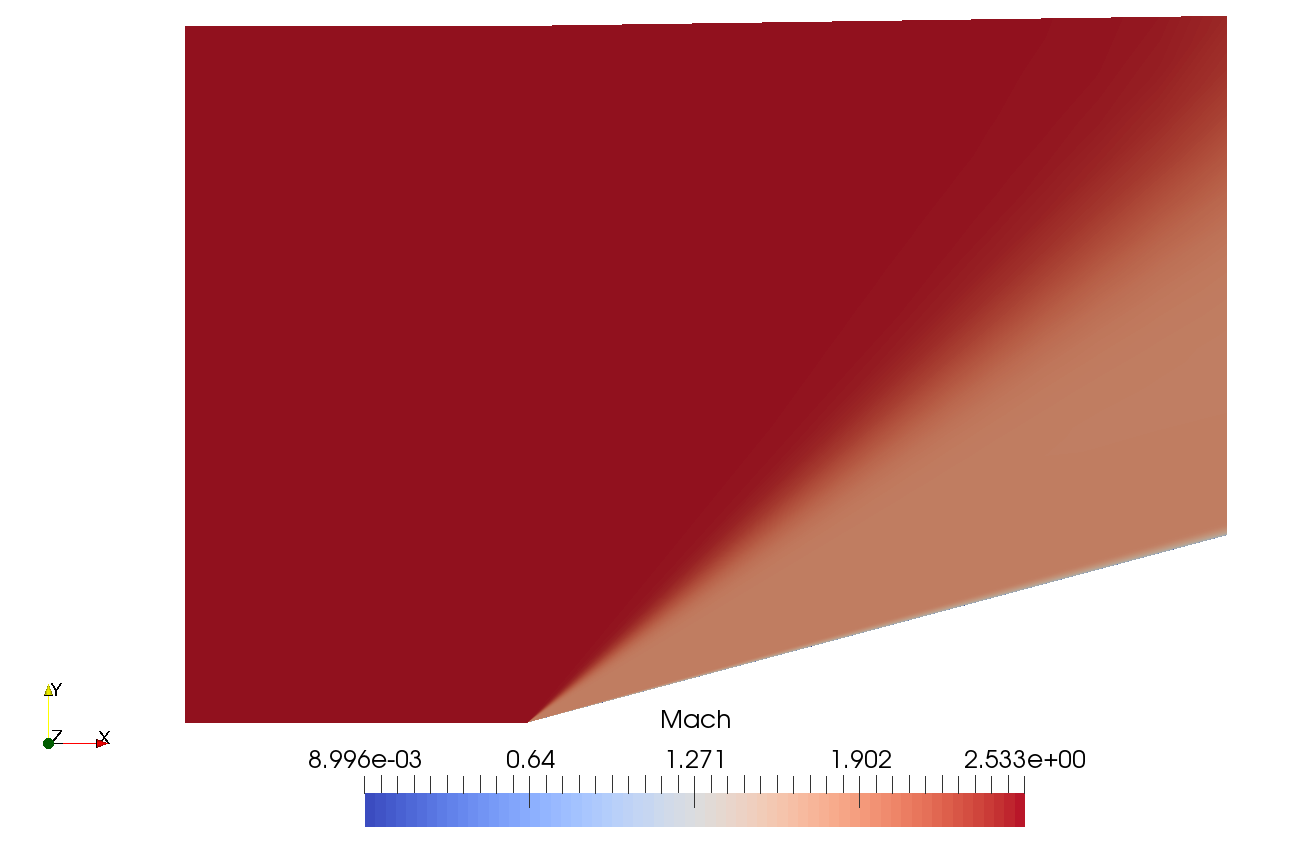

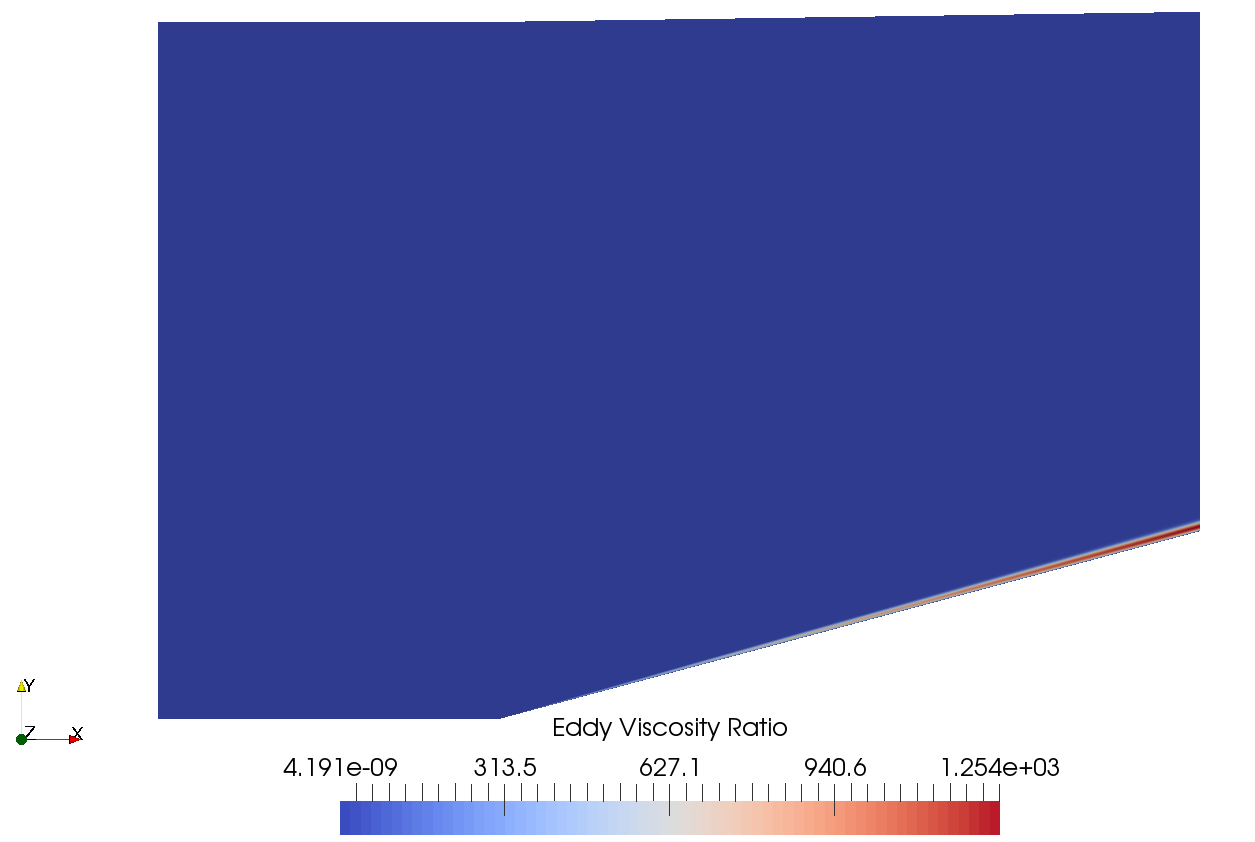

Supersonic Wedge

To compare these implicit methods the simulation of supersonic turbulent flow over a 15 degree wedge was used. Freestream conditions of 23842.3 , 0.379597 , and 739.9 were used. The Reynolds-Averaged Navier-Stokes equations were solved with the Wilcox 2006 turbulence model. The block matrix methods became unstable when the default freetream eddy viscosity ratio of ten was used, so for these simulations all methods used 0.001. The grid used was 101 x 121 x 2 with near wall spacing of to ensure a value less than one. The simulations were solved with second order accuracy in space using MUSCL reconstruction with Aither’s thirdOrder option. The minmod limiter was used to avoid spurious oscillations near the shock. Contours of Mach number and turbulent eddy viscosity are shown below.

Implementation Of Implicit Methods

The implicit methods differ primarily in their construction of the , , and matrices. Since the and matrices are constructed in a similar was as the matrix, only the matrix will be shown here. The LU-SGS and DPLUR methods use an identical matrix. The BLU-SGS and BDPLUR methods use an indentical matrix as well. In all methods the matrix is the only one that is stored, while the and matrices are calculated on-the-fly. The mean flow and turbulence equations are handled separately, so two scalars are used for in the LU-SGS and DPLUR methods. For BLU-SGS and BDPLUR a 5 x 5 matrix and a 2 x 2 matrix (for a two equation turbulence model) are used. The inviscid flow jacobian follows the derivation in Blazek, and the viscous flow jacobian uses the thin shear layer approximation and the derivation shown in Dwight. The following definitions are used in the equations below: , , , and

Once the matrix is calulated and stored, the off-diagonal and matrices are computed on-the-fly during the matrix relaxation procedure. For the scalar methods the off diagonal is further approximated as shown below. For the block methods, the full matrix-vector multiplication is done on the off diagonals.

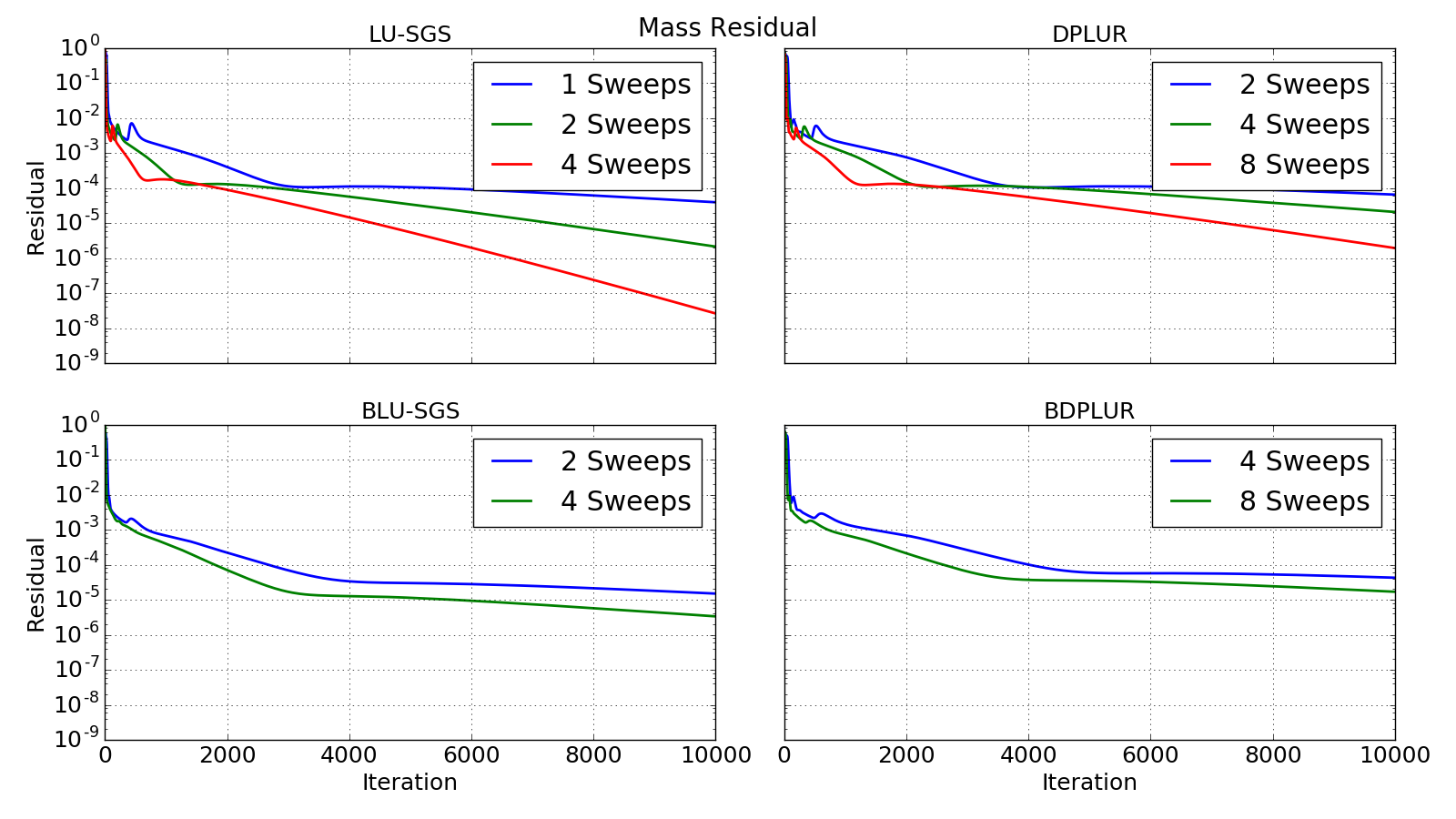

Results

The four implicit methods were used to solve the supersonic wedge varying the number of sweeps (Gauss Seidel or Jacobi). The simulations were run for 10,000 iterations in parallel on 4 processors. Aither was compiled with GCC 6.1 and OpenMPI 2.0.0 on Ubuntu 16.04. The processor used was a quad core Intel Core i7-4700MQ @ 2.4GHz. Each simulation was only run once, so this was not a rigorous timing study. The table below shows a summary of all the cases run including the final mass residual L2 norm relative to the highest mass residual within the first 5 iterations. The matrix relaxation used is also shown. All cases except the BLU-SGS and BDPLUR cases with the lowest number of sweeps used the default value of 1. Since the block matrix methods have worse diagonal dominance, relaxation is occassionally needed to aid stability. All simulations were run using local time stepping with a CFL number of 1e5.

| Method | Sweeps | Relaxation | Final Mass Residual | Simulation Time (s) |

|---|---|---|---|---|

| LU-SGS | 1 | 1 | 3.9302e-5 | 477.4 |

| LU-SGS | 2 | 1 | 2.1304e-6 | 612.8 |

| LU-SGS | 4 | 1 | 2.6281e-8 | 893.7 |

| DPLUR | 2 | 1 | 6.5039e-5 | 503.3 |

| DPLUR | 4 | 1 | 2.0773e-5 | 603.9 |

| DPLUR | 8 | 1 | 1.9359e-6 | 870 |

| BLU-SGS | 2 | 1.1 | 1.4966e-5 | 2013 |

| BLU-SGS | 4 | 1 | 3.3500e-6 | 3223 |

| BDPLUR | 4 | 1.2 | 4.2507e-5 | 2057 |

| BDPLUR | 8 | 1 | 1.6883e-5 | 3319 |

The same behavior is shown with the residuals for the other equations, so they are omitted here. The number of sweeps for the DPLUR based methods was doubled compared to their LU-SGS based counter parts because the DPLUR methods use a Jacobi relaxation instead of the 2x more efficient Gauss Seidel relaxation. It is expected that the block matrix based methods take longer due to the increase in computational effort required. However these methods will likely improve in later versions of Aither as a linear matrix library such as Eigen or PETSc is slated to be used. Based on the literature it is expected that the block matrix methods should perform better on highly stretched grids such as this one used for a RANS simulation. However, for this case the benefit of the block matrix methods is not observed. Previous simulations have shown a small benefit to using the block matrix methods in some cases, but usually not enough to justify their extra cost.

Conclusions

For the supersonic wedge case analyzed here, LU-SGS has the best performance. It is stable and efficient. The block matrix based methods show rather poor performance in terms of residual drop and simulation time, however the latter is expected to improve in future versions of Aither. For cases other than this one, the block matrix methods have shown better convergance than their scalar counterparts, and no need to increase the relaxation factor from the default value of 1. How do these results compare with your experience? Comment below!

References

[1] Yoon, S and Jameson, A. Lower-Upper Symmetric-Gauss-Seidel Method for the Euler and Navier-Stokes Equations. 1988. AIAA Journal Vol 26 No 9.

[2] Chen, R. F. and Wang, Z. J. Fast, Block Lower-Upper Symmetric Gauss-Seidel Scheme for Arbitrary Grids. December 2000. AIAA Journal Vol 38 No 12.

[3] Candler, G. V. et al. A Data-Parallel LU-SGS Method for Reacting Flows. 1994. AIAA 94-0410.

[4] Wright, M. J et al. Data-Parallel Lower-Upper Relaxation Method for the Navier-Stokes Equations. 1996. AIAA Journal Vol 34 No 7.